ARTOS and Björn Barz's corresponding undergraduate thesis were recently awarded the prize of the City of Jena for the best applied thesis.

1. What is ARTOS?

ARTOS is the Adaptive Real-Time Object Detection System, created at the Computer Vision Group of the University of Jena (Germany) by Björn Barz during a research project advised by Erik Rodner. It was inspired by Goering et al. (ICRA 2014) and the related system developed at UC Berkeley and UMass Lowell.

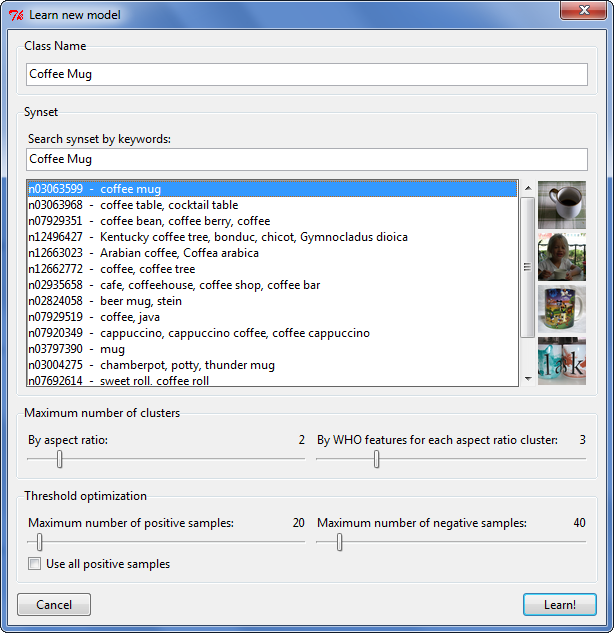

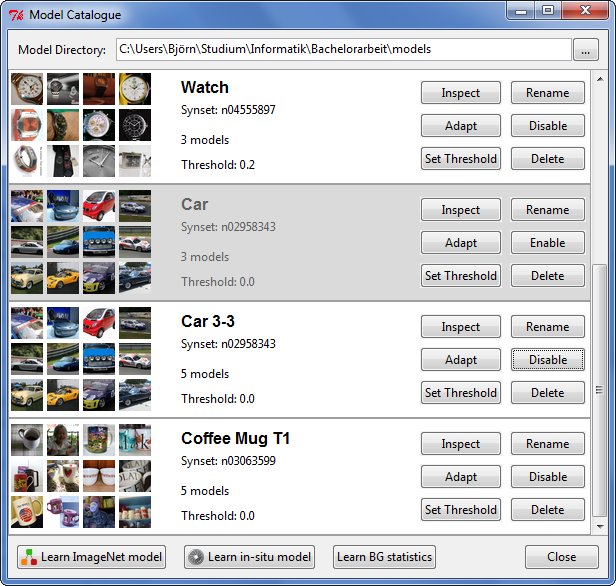

It can be used to quickly learn models for visual object detection without having to collect a set of samples manually. To make this possible, it uses ImageNet, a large image database with more than 20,000 categories. For more than 3,000 of those categories, ImageNet provides an average of 300 to 500 images with bounding box annotations, which makes it suitable for object detection.

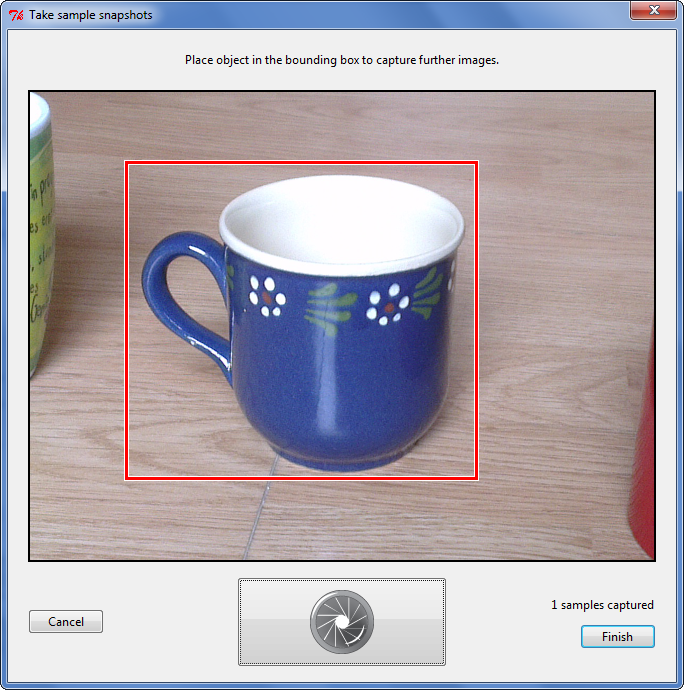

ARTOS does more than use those images, in combination with clustering and a technique called Whitened Histograms of Orientations (WHO, Hariharan et al.), to learn new models quickly: it can also adapt those models to other domains using in-situ images and apply them to detect objects in images and video streams.
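WHO models are linear discriminant analysis (LDA) models over HOG features. Following Hariharan et al., a model is computed in closed form as w = S⁻¹(μ_pos − μ_0), where μ_pos is the mean feature vector of the positive samples, and μ_0 and S are the mean and covariance of generic background features, which can be estimated once and reused for every new category. The pure-Python sketch below illustrates only that closed-form step on toy 2-D vectors. The function names are hypothetical, and this is not the libartos implementation, which works on HOG feature cells and also performs the clustering mentioned above.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def solve(a: Matrix, b: Vector) -> Vector:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Work on an augmented copy so the inputs stay untouched.
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def mean(vectors: List[Vector]) -> Vector:
    """Component-wise mean of equal-length vectors."""
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(len(vectors[0]))]


def lda_model(positives: List[Vector], bg_mean: Vector, bg_cov: Matrix) -> Vector:
    """WHO/LDA weight vector w = S^-1 (mu_pos - mu_0)."""
    mu_pos = mean(positives)
    diff = [p - q for p, q in zip(mu_pos, bg_mean)]
    return solve(bg_cov, diff)


def score(w: Vector, x: Vector) -> float:
    """Detection score of a feature vector x: the dot product w . x."""
    return sum(wi * xi for wi, xi in zip(w, x))


# Toy example: 2-D "features" with a fixed background distribution.
background_mean = [0.0, 0.0]
background_cov = [[2.0, 0.0], [0.0, 0.5]]
positives = [[1.0, 1.0], [3.0, 0.0]]
w = lda_model(positives, background_mean, background_cov)
print(w)  # [1.0, 1.0]
```

Because the background statistics do not depend on the category, learning a new model only requires averaging the positive features and solving one linear system, which is why this approach is fast enough for quick model learning.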

ARTOS consists of two parts. The first is a library (libartos), which provides all the functionality mentioned above. It is implemented in C++, but additionally exports the important functions through a C-style procedural interface, so that the library can be used from a wide range of programming languages and environments.

The other part is a graphical user interface (PyARTOS), written in Python, which makes it comfortable to perform the operations of ARTOS.
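The C-style interface of libartos is what makes it callable from languages such as Python without compiled bindings. As a generic illustration of that pattern only (the actual libartos function names and signatures are not listed here), the sketch below uses Python's standard ctypes module to load a shared C library, declare a function's argument and return types, and call it. It uses the C math library's sqrt as a stand-in and assumes a Unix-like system where ctypes.util.find_library("m") resolves libm.

```python
import ctypes
import ctypes.util


def load_c_function(lib_name, func_name, argtypes, restype):
    """Load a function from a shared C library and declare its prototype."""
    path = ctypes.util.find_library(lib_name)
    if path is None:
        raise OSError("shared library %r not found" % lib_name)
    lib = ctypes.CDLL(path)
    func = getattr(lib, func_name)
    # Declaring the types lets ctypes convert Python values and check arity.
    func.argtypes = argtypes
    func.restype = restype
    return func


# Stand-in example: double sqrt(double) from the C math library.
c_sqrt = load_c_function("m", "sqrt", [ctypes.c_double], ctypes.c_double)
print(c_sqrt(2.0))
```

Calling a libartos function would presumably follow the same steps, with the library path and prototypes taken from the library's C interface declarations.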

Please note: ARTOS is still a work in progress. This is a first release, which still lacks some functionality we plan to add later, and you may encounter bugs.

2. Dependencies

libartos

The ARTOS C++ library incorporates a modified version of the Fast Fourier Linear Detector (FFLD, v1) for DPM detection and the Eigen library (v3.1) for linear algebra. Both are already bundled with ARTOS.

In addition, libartos requires the following third-party libraries:

- libfftw3f

- libjpeg

- libxml2

- OpenMP (optional, but strongly recommended)

PyARTOS

The Python graphical user interface to ARTOS requires Python 2.7 or higher. It has been designed to work with Python 2.7 as well as with Python 3.2 or later.

PyARTOS has been tested successfully with Python 2.7.6, Python 3.3.4 and Python 3.4.0.
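A script that has to run on both Python lines can check the interpreter up front. The helper below is hypothetical (is_supported_python is not part of PyARTOS) and simply encodes the version rule stated above: 2.7 on the Python 2 line, 3.2 or later on the Python 3 line. It avoids Python 3-only syntax so that it also runs under Python 2.

```python
import sys


def is_supported_python(version_info=None):
    """Return True for Python 2.7 or Python 3.2+, the versions PyARTOS targets."""
    major, minor = tuple(version_info or sys.version_info)[:2]
    if major == 2:
        return minor >= 7
    return (major, minor) >= (3, 2)


if __name__ == "__main__":
    if not is_supported_python():
        sys.exit("PyARTOS needs Python 2.7 or 3.2+, found %d.%d" % tuple(sys.version_info[:2]))
    print("Python version OK")
```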

The following Python modules are required:

- Tkinter: The Python interface to Tk. It is bundled with Python on Windows. On Unix, search for a package named python-tk or python3-tk.

- PIL (>= 1.1.6): The Python Imaging Library.
    - Python 2:
        - Packages: python-imaging and python-imaging-tk
        - Binaries for Win32: http://www.pythonware.com/products/pil/index.htm
    - Python 3 and Python 2 64-bit: Since PIL is no longer being developed and, thus, not available for Python 3, the Pillow fork can be used as a drop-in replacement.
        - Packages: python3-imaging and python3-imaging-tk
        - Unofficial Pillow binaries: http://www.lfd.uci.edu/~gohlke/pythonlibs/#pillow

- At least one of the following modules for accessing video devices:
    - Unix:
        - python-opencv
        - pygame: http://www.pygame.org/download.shtml
    - Windows: VideoCapture (>= 0.9-5): http://videocapture.sourceforge.net/

Note that, as of May 2014, neither python-opencv nor VideoCapture is available for Python 3.

However, support for another video capture module can easily be added by writing a new camera abstraction class in the PyARTOS.Camera sub-package.
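Before launching the GUI, it can help to see which of these modules Python can actually find. The sketch below (Python 3.4+) uses importlib.util.find_spec, which locates a module without importing it, and maps each requirement to the first matching module name. The candidate names are assumptions derived from the packages above: Tkinter is named tkinter on Python 3, and python-opencv provides the cv2 module. It only checks importability, not version constraints such as PIL >= 1.1.6.

```python
import importlib.util

# Candidate top-level module names for each requirement listed above.
REQUIREMENTS = {
    "Tkinter": ["tkinter", "Tkinter"],
    "PIL / Pillow": ["PIL"],
    "video capture": ["cv2", "pygame", "VideoCapture"],
}


def find_first(candidates):
    """Return the first importable module name from candidates, or None."""
    for name in candidates:
        # Only top-level names are used: find_spec on a dotted name would
        # import the parent package and fail if that package is missing.
        if importlib.util.find_spec(name) is not None:
            return name
    return None


def check_requirements(requirements=REQUIREMENTS):
    """Map each requirement to the module that satisfies it (or None)."""
    return {req: find_first(names) for req, names in requirements.items()}


if __name__ == "__main__":
    for req, module in check_requirements().items():
        print("%-15s %s" % (req, module if module else "MISSING"))
```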

3. Building the library

Building libartos requires CMake and a C++ compiler. It has been successfully built using the GNU C++ Compiler. Other compilers may be supported too, but have not been tested.

To build libartos on Unix, run the following from the ARTOS root directory:

    mkdir bin
    cd bin
    cmake ../src/
    make

This will create a new binary directory, search for the required third-party libraries, generate a Makefile and execute it.

To build libartos on Windows, use the CMake GUI to generate MinGW Makefiles and to set the paths to the third-party libraries appropriately.
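For scripted or repeated builds, the same steps can be driven from Python. The helper below is hypothetical (it is not part of ARTOS). On Unix it reproduces the commands above, using "cmake --build ." in place of calling make directly. On Windows it passes CMake's "MinGW Makefiles" generator on the command line instead of using the GUI. It assumes that cmake (and, on Windows, MinGW) is on the PATH, and it does not set any third-party library paths: if CMake cannot find those libraries on its own, you still have to supply them, for example through the CMake GUI.

```python
import os
import subprocess
import sys


def build_steps(root, windows=None):
    """Return (working_dir, command) pairs mirroring the manual build steps."""
    if windows is None:
        windows = sys.platform.startswith("win")
    bin_dir = os.path.join(root, "bin")
    configure = ["cmake", os.path.join("..", "src")]
    if windows:
        # Select the generator the CMake GUI would use for MinGW Makefiles.
        configure += ["-G", "MinGW Makefiles"]
    # "cmake --build ." runs the generated build system (here: the Makefile).
    return [(bin_dir, configure), (bin_dir, ["cmake", "--build", "."])]


def build(root, dry_run=True):
    """Create bin/ and run each build step, or only print them if dry_run."""
    if not dry_run:
        os.makedirs(os.path.join(root, "bin"), exist_ok=True)
    for cwd, cmd in build_steps(root):
        print("[%s] %s" % (cwd, " ".join(cmd)))
        if not dry_run:
            subprocess.check_call(cmd, cwd=cwd)
```

With the default dry_run=True it only prints the commands; build(".", dry_run=False), run from the ARTOS root directory, creates the bin directory and performs the build.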

4. Setting up the environment

The ImageNet image repository is an essential part of the ARTOS workflow.

Hence, before the first use of ARTOS, you need to download:

- a (full) copy of the ImageNet image data for all synsets

    This requires an account on ImageNet. Registration can be done here: http://www.image-net.org/signup

    After that, a Tar archive with all full-resolution images (> 1 TB) should be available for download.

- the bounding box annotation data for those synsets

    It can be downloaded as a Tar archive from the following URL (no account required): http://image-net.org/Annotation/Annotation.tar.gz

- a synset list file, listing all available synsets and their descriptions

    There is a Python script in the ARTOS root directory that does this for you. It downloads the list of synsets for which bounding box annotations are available and converts it to the appropriate format. Just run:

        python fetch_synset_wordlist.py

    That will create synset_wordlist.txt.

Then set up your local copy of ImageNet as follows:

- Create a new directory where your local copy of ImageNet will reside.

- Put the synset_wordlist.txt file directly inside that directory.

- Create two sub-directories: Images and Annotation.

- Unpack the images Tar file into the Images directory, so that it contains one Tar file for each synset.

- Unpack the annotations Tar file into the Annotation directory, so that it contains one Tar file for each synset. If those archives are compressed (gzipped), decompress them by running the following from within the Annotation directory:

        gzip -d -r .
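Before pointing ARTOS at the directory, the layout described above can be checked with a short script. The sketch below is hypothetical (it is not shipped with ARTOS) and assumes that each per-synset archive is named after its synset ID (for example n02084071.tar), so that image and annotation archives can be matched by file name. decompress_gz_files is a pure-Python stand-in for gzip -d -r ., which may be handy on Windows systems without gzip.

```python
import gzip
import os
import shutil


def decompress_gz_files(directory):
    """Decompress *.gz and *.tgz files below directory in place.

    Roughly what "gzip -d -r ." does: each compressed file is replaced by
    its decompressed version (foo.tar.gz -> foo.tar, foo.tgz -> foo.tar).
    """
    decompressed = []
    for dirpath, _dirnames, filenames in os.walk(directory):
        for name in filenames:
            if name.endswith(".gz"):
                target = name[:-3]
            elif name.endswith(".tgz"):
                target = name[:-4] + ".tar"
            else:
                continue
            src = os.path.join(dirpath, name)
            dst = os.path.join(dirpath, target)
            with gzip.open(src, "rb") as fin, open(dst, "wb") as fout:
                shutil.copyfileobj(fin, fout)
            os.remove(src)  # gzip -d also removes the compressed original
            decompressed.append(dst)
    return decompressed


def tar_stems(directory):
    """Names of the *.tar files in directory, without the extension."""
    if not os.path.isdir(directory):
        return set()
    return {f[:-4] for f in os.listdir(directory) if f.endswith(".tar")}


def check_imagenet_dir(root):
    """List problems with the expected layout (an empty list means OK)."""
    problems = []
    if not os.path.isfile(os.path.join(root, "synset_wordlist.txt")):
        problems.append("synset_wordlist.txt is missing")
    for sub in ("Images", "Annotation"):
        path = os.path.join(root, sub)
        if not os.path.isdir(path):
            problems.append("sub-directory %s is missing" % sub)
        elif not tar_stems(path):
            problems.append("%s contains no .tar files" % sub)
    return problems


def usable_synsets(root):
    """Synsets that have both an image archive and an annotation archive."""
    images = tar_stems(os.path.join(root, "Images"))
    annotations = tar_stems(os.path.join(root, "Annotation"))
    return sorted(images & annotations)
```

Since bounding boxes exist for only a subset of the synsets, many image archives will have no matching annotation archive; usable_synsets therefore reports the intersection instead of treating those as errors.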

5. Launching the ARTOS GUI

After you have built libartos as described in (3), installed all required Python modules mentioned in (2) and set up your local copy of ImageNet as described in (4), you're ready to go! From the ARTOS root directory, run:

    python launch-gui.py

On the first run, it will show a setup dialog that asks for the directory in which to store learned models and for the path to your local copy of ImageNet. It may also ask for the path to libartos, but usually the library will be detected automatically.
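Automatic detection of this kind usually comes down to looking for the platform's shared-library file name in a few likely directories. The sketch below illustrates that idea only; it is not PyARTOS's actual detection logic, and both the file names and the search order (the bin build directory from section 3, then the current directory) are assumptions. The Windows names reflect that CMake's MinGW generator adds a "lib" prefix to shared libraries while MSVC does not. If detection fails, the path of the library produced by your build is what the setup dialog asks for.

```python
import os
import sys


def library_file_names(platform=None):
    """Likely shared-library file names for libartos on each platform."""
    platform = platform or sys.platform
    if platform.startswith("win"):
        # MinGW builds via CMake usually add a "lib" prefix; MSVC does not.
        return ["libartos.dll", "artos.dll"]
    if platform == "darwin":
        return ["libartos.dylib"]
    return ["libartos.so"]


def find_libartos(search_dirs, platform=None):
    """Return the first existing library path in search_dirs, or None."""
    for directory in search_dirs:
        for name in library_file_names(platform):
            candidate = os.path.join(directory, name)
            if os.path.isfile(candidate):
                return candidate
    return None


if __name__ == "__main__":
    # Hypothetical search order: the CMake build directory first, then cwd.
    print(find_libartos([os.path.join(os.getcwd(), "bin"), os.getcwd()]))
```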

Note that the first time you run the detector or learn a new model, it will be very slow, because the FFTW library collects information about your system and stores it in a file called wisdom.fftw to speed up subsequent Fourier transforms.

Have fun!

6. License and credits

If you use ARTOS for research purposes, you need to cite the corresponding arXiv report:

    @article{DBLP:journals/corr/Barz14ART,
      author  = {Bj{\"o}rn Barz and Erik Rodner and Joachim Denzler},
      title   = {ARTOS -- Adaptive Real-Time Object Detection System},
      journal = {CoRR},
      volume  = {abs/1407.2721},
      year    = {2014},
      ee      = {http://arxiv.org/abs/1407.2721},
      url     = {http://cvjena.github.io/artos/}
    }

ARTOS is released under the GNU General Public License (version 3). You should have received a copy of the license text along with ARTOS.

The icons used in the PyARTOS GUI were created by different authors listed below. None of them is connected to ARTOS or the University of Jena in any way.

- Model Catalogue: PICOL - http://www.picol.org [Creative Commons (Attribution-Share Alike 3.0 Unported)]

- Camera: Visual Pharm - http://icons8.com/ [Creative Commons Attribution-No Derivative Works 3.0 Unported]

- Images (Batch Detections): Ionicons - http://ionicons.com/ [MIT License]

- Settings: Webalys - http://www.webalys.com/minicons

- Quit: Danilo Demarco - http://www.danilodemarco.com/

- Camera Shutter: Marc Whitbread - http://www.25icons.com [Creative Commons Attribution 3.0 - United States (+ Attribution)]